About annotation

- Tasks:
  - Segmenting
  - Labelling

It is commonly acknowledged in Corpus Linguistics that annotation is an inherently ongoing process.

Manual vs Automatic

Corpus annotation "can be defined as the practice of adding interpretative, linguistic information to an electronic corpus of spoken and/or written language data. 'Annotation' can also refer to the end-product of this process" (Leech, 1997).


Linguistic data are annotated several times, by one or several annotators, each of whom annotates according to his/her knowledge, beliefs and uncertainty.

Some annotations can be assigned without any doubt, which indicates that some properties of the field are well established.

Most automatic annotation systems include a decision-making procedure that delivers a final result based on scores assigned to a set of candidate annotations.

The score often corresponds to the reliability of a solution according to a model.

Close scores can be interpreted as uncertainty in the decision-making procedure with respect to the given model.
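A minimal sketch of such a decision-making procedure, assuming the model's scores are given as a dictionary and that "close" means the gap between the two best scores falls below a fixed threshold. The names `decide` and `Decision` and the default threshold of 0.1 are hypothetical and do not come from any particular annotation system:

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str        # best-scoring candidate annotation
    margin: float     # gap between the two best scores
    uncertain: bool   # True when the gap is below the threshold


def decide(scores: dict[str, float], threshold: float = 0.1) -> Decision:
    """Pick the best-scoring annotation and flag close scores as uncertain.

    `scores` maps each candidate annotation to its score under some model;
    `threshold` is a hypothetical tuning parameter, not a published value.
    """
    if not scores:
        raise ValueError("at least one candidate is required")
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    best_label, best_score = ranked[0]
    # With a single candidate there is no competitor, so the margin is infinite.
    runner_up = ranked[1][1] if len(ranked) > 1 else float("-inf")
    margin = best_score - runner_up
    return Decision(best_label, margin, margin < threshold)


d = decide({"a": 0.48, "ə": 0.45, "e": 0.07})
print(d.label, d.uncertain)
```

A classical system would output only `d.label`; keeping `margin` and `uncertain` is one simple way to preserve the information that the decision was a close call.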

Uncertainty: The lack of certainty. A state of having limited knowledge where it is impossible to exactly describe the existing state, a future outcome, or more than one possible outcome.

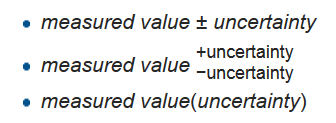

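In metrology, physics, and engineering, the uncertainty or margin of error of a measurement is stated by giving a range of values likely to enclose the true value. This may be denoted by error bars on a graph, or by notations such as \(x \pm u\), \(x^{+u_1}_{-u_2}\) or \(x(u)\), where \(x\) is the measured value and \(u\) its uncertainty (in the last form, expressed in units of the last digits of \(x\)).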
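As a worked illustration with hypothetical values, a time boundary measured at 1.250 s with a symmetric uncertainty of 20 ms, and the same boundary with an asymmetric uncertainty, correspond to the following ranges of values likely to enclose the true value:

\[
1.250 \pm 0.020\ \text{s} \;=\; 1.250(20)\ \text{s} \;\Longleftrightarrow\; [1.230,\ 1.270]\ \text{s}
\]
\[
1.250^{+0.030}_{-0.010}\ \text{s} \;\Longleftrightarrow\; [1.240,\ 1.280]\ \text{s}
\]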

"You cannot be certain about uncertainty" (Frank Knight, economist at University of Chicago)

"You are uncertain, to varying degrees, about everything in the future; much of the past is hidden from you; and there is a lot of the present about which you do not have full information. Uncertainty is everywhere and you cannot escape from it." (Dennis Lindley, Understanding Uncertainty, 2006)

If an annotation does not easily fit into an existing theory, it is likely to be something linguistically interesting and worthy of attention.

For automatic annotations, modelling the variance and indeterminacy of the system reduces ambiguity when interpreting and applying the resulting annotations.

For manual annotations, keeping information about the annotator's uncertainty is motivated by three important factors:

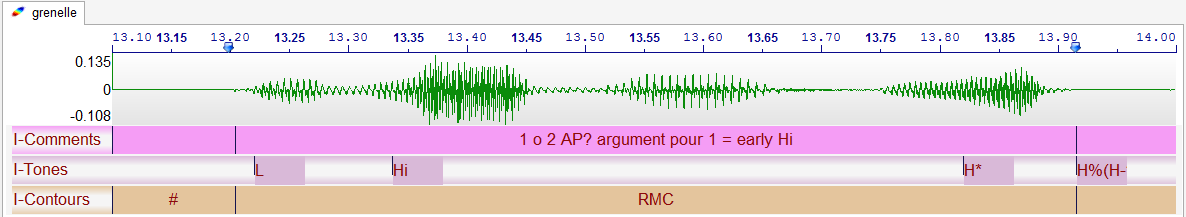

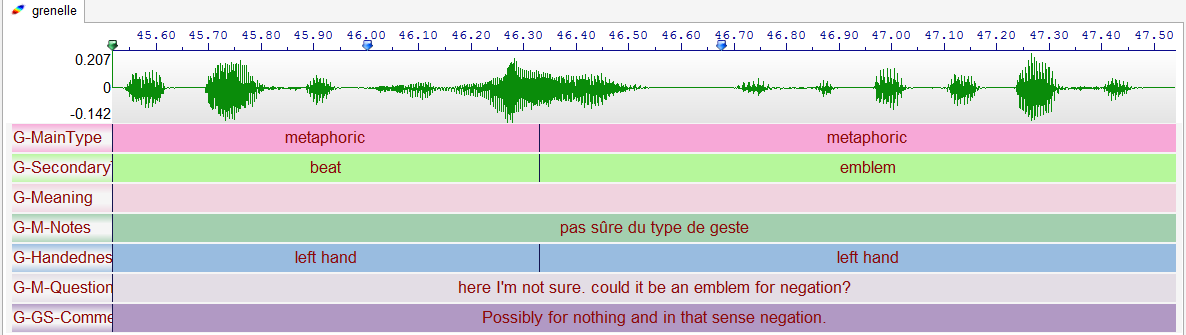

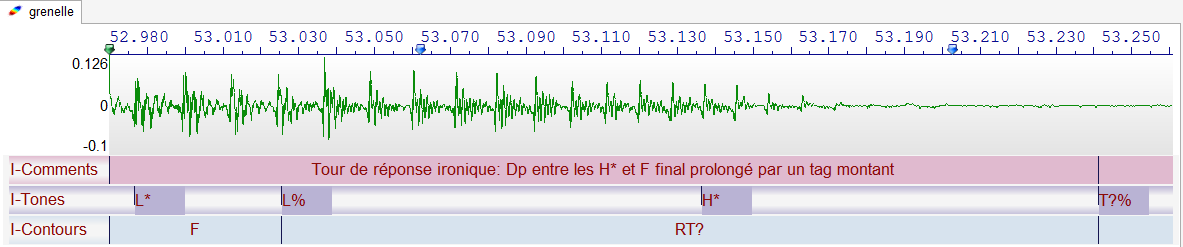

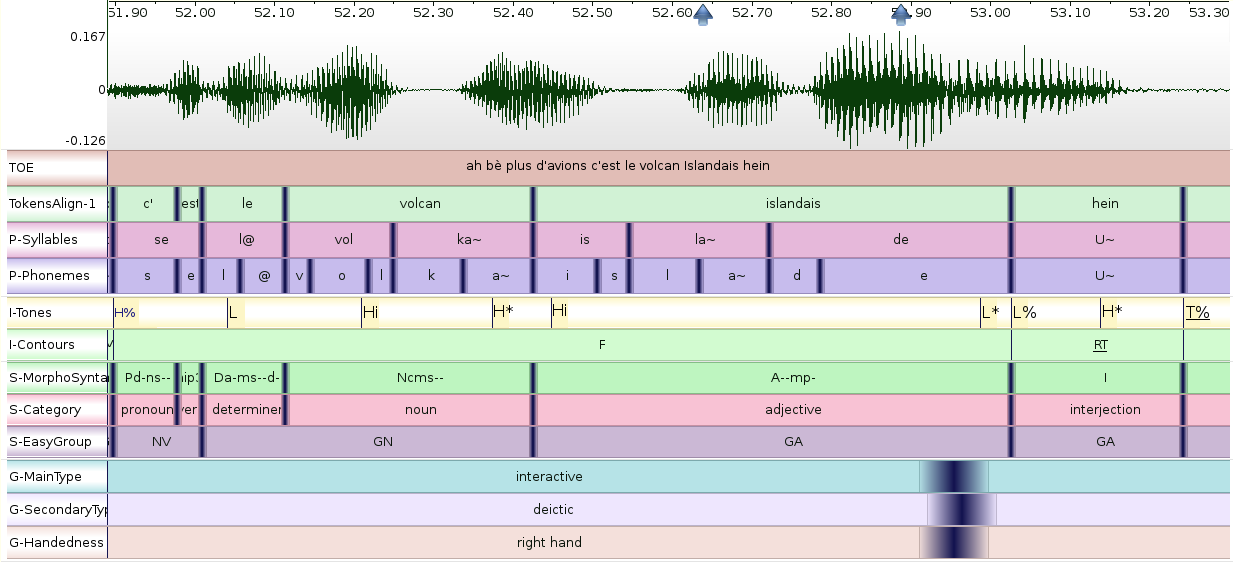

The annotation of recordings concerns many linguistic sub-fields, such as Phonetics, Prosody, Gestures and Discourse.

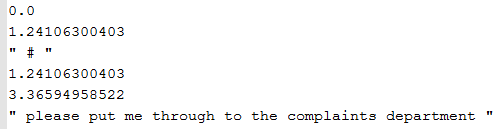

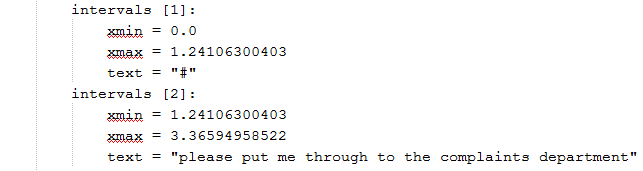

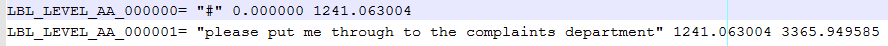

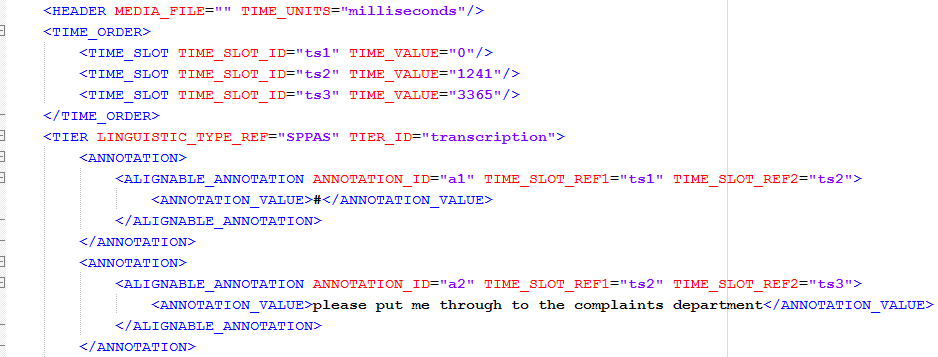

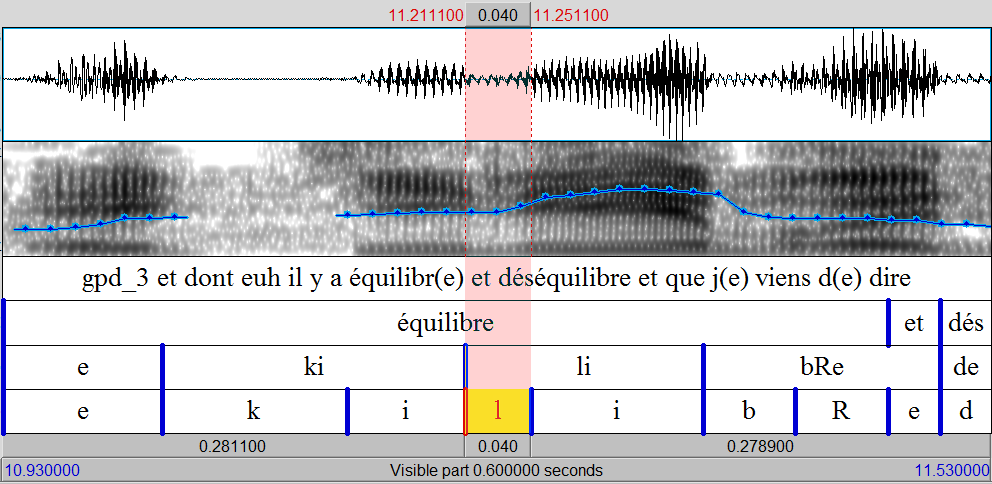

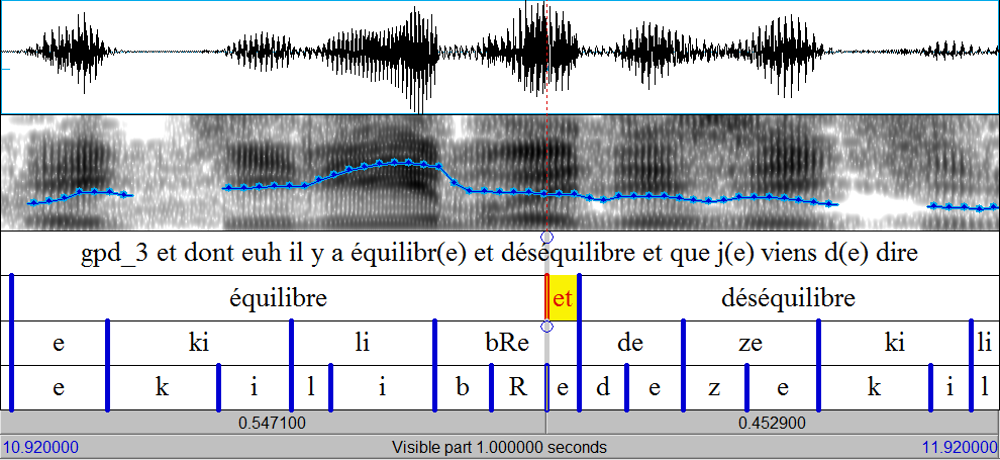

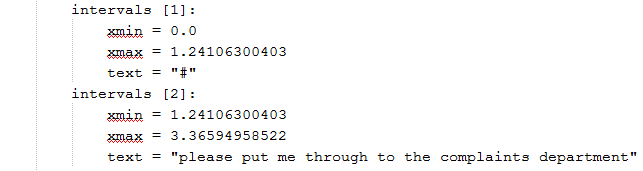

Corpora are annotated with detailed information at various linguistic levels using annotation software.

None of the existing audio/video annotation tools allows the annotator's uncertainty to be represented.

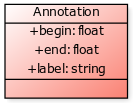

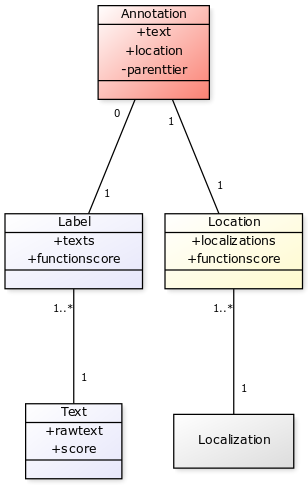

The suggestion that a concept can be adequately represented by this information alone is false, as anyone who has had to model both time and label knows.

After all, if such uncertainties can be identified, they can be modelled.

A general speech annotation framework therefore needs to allow uncertainty to be represented, so that such annotations become part of the framework itself.


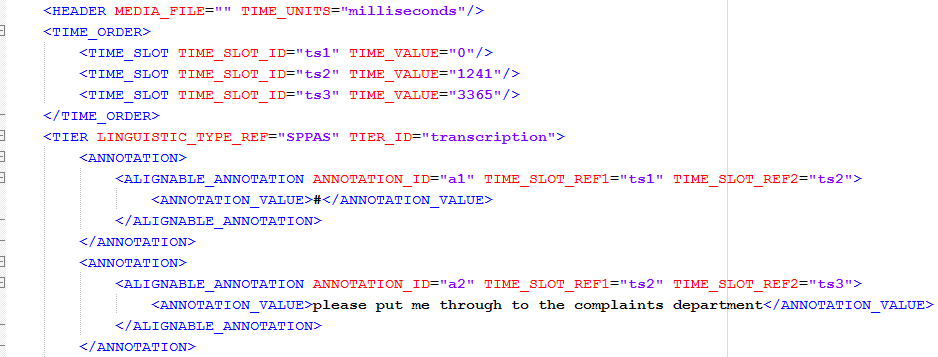

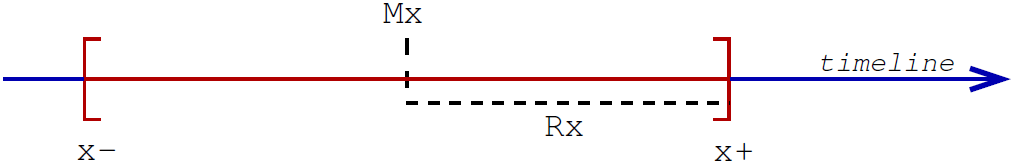

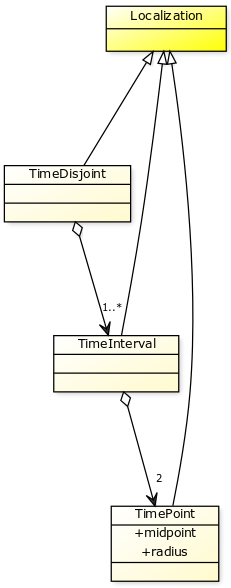

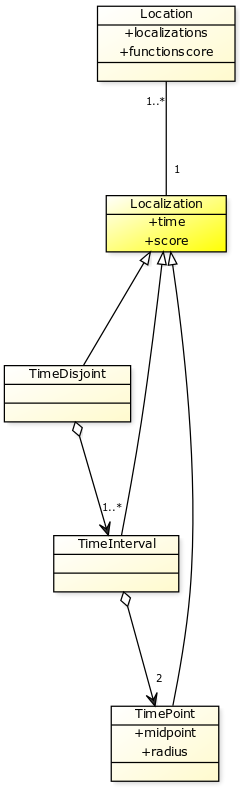

Our proposal is to define a TimePoint \(X\) as an imprecise value ranging from a time value \(x^-\) to a time value \(x^+\).
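Since the range from \(x^-\) to \(x^+\) can equivalently be described by its midpoint \(m = (x^- + x^+)/2\) and its radius \(r = (x^+ - x^-)/2\), a minimal Python sketch of such a TimePoint can expose both views. The class and method names below are hypothetical and do not reproduce the SPPAS API:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimePoint:
    """Hypothetical imprecise time value X ranging from x_minus to x_plus (seconds)."""
    x_minus: float
    x_plus: float

    def __post_init__(self) -> None:
        if self.x_minus > self.x_plus:
            raise ValueError("x_minus must not exceed x_plus")

    @classmethod
    def from_midpoint(cls, midpoint: float, radius: float = 0.0) -> "TimePoint":
        """Build the range [midpoint - radius, midpoint + radius]."""
        if radius < 0:
            raise ValueError("radius must be non-negative")
        return cls(midpoint - radius, midpoint + radius)

    @property
    def midpoint(self) -> float:
        return (self.x_minus + self.x_plus) / 2

    @property
    def radius(self) -> float:
        return (self.x_plus - self.x_minus) / 2

    def is_precise(self) -> bool:
        # A classical, exact time value is the degenerate case x- == x+.
        return self.x_minus == self.x_plus


p = TimePoint.from_midpoint(1.25, 0.02)   # "about 1.25 s, give or take 20 ms"
print(p.x_minus, p.x_plus)
```

A precise time value is simply the special case of a zero radius, so existing exact annotations remain representable.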

This proposal solves the four problems mentioned previously.

It allows the annotator to express the localization of an interval as "the annotation starts about here, and ends about there".

It may in some cases prevent the analyst from drawing wrong conclusions unsupported by the original data.
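To illustrate how imprecise bounds can block unsupported conclusions, here is a sketch under stated assumptions: two imprecise points are ordered only when their ranges do not overlap, and an interval whose bounds are both imprecise yields a range of possible durations rather than a single value. `TimePoint`, `Order`, `compare` and `Interval` are hypothetical names, and these ordering rules are one reasonable choice, not necessarily the ones used in SPPAS:

```python
from dataclasses import dataclass
from enum import Enum


@dataclass(frozen=True)
class TimePoint:
    """Hypothetical imprecise time value ranging from x_minus to x_plus (seconds)."""
    x_minus: float
    x_plus: float


class Order(Enum):
    BEFORE = "before"
    AFTER = "after"
    UNDETERMINED = "undetermined"


def compare(a: TimePoint, b: TimePoint) -> Order:
    """Order two imprecise points; overlapping ranges cannot be ordered."""
    if a.x_plus < b.x_minus:
        return Order.BEFORE
    if b.x_plus < a.x_minus:
        return Order.AFTER
    return Order.UNDETERMINED


@dataclass(frozen=True)
class Interval:
    """'Starts about here, ends about there': both bounds are imprecise."""
    begin: TimePoint
    end: TimePoint

    def __post_init__(self) -> None:
        # Reject intervals whose end lies entirely at or before the begin range.
        if self.end.x_plus <= self.begin.x_minus:
            raise ValueError("end cannot precede begin")

    def duration_range(self) -> tuple[float, float]:
        """Smallest and largest durations compatible with the imprecise bounds."""
        shortest = max(0.0, self.end.x_minus - self.begin.x_plus)
        longest = self.end.x_plus - self.begin.x_minus
        return shortest, longest


iv = Interval(TimePoint(1.20, 1.30), TimePoint(1.45, 1.60))
print(iv.duration_range())   # roughly 0.15 s to 0.40 s, not one fixed value
print(compare(TimePoint(1.20, 1.30), TimePoint(1.25, 1.40)))   # overlapping ranges
```

An analyst who read only the midpoints would conclude that the second event follows the first; the overlapping ranges show that the data do not support that claim.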

The context sentences in (1) and (2) contain two possible referents for the pronoun 'He', one that appears in subject position and fills the Source thematic role, and one that appears as the object of a prepositional phrase and fills the Goal thematic role.

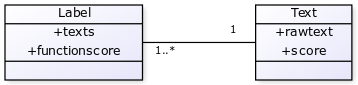

Allow multiple labels to be selected and assign a score to each possible label.

Notice that the label is then a list of text/score pairs, but these pairs are not plain 2-tuples: two labels are equal if their texts are equal, regardless of their scores.
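This equality rule can be sketched with a dataclass field excluded from comparison. In the sketch below, `Tag` and `Label` are hypothetical names, label equality is assumed to compare the sets of texts (ignoring order), and the Source/Goal scores for the pronoun 'He' are invented for illustration:

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tag:
    """Hypothetical text/score pair; equality and hashing use the text only."""
    text: str
    score: float = field(default=1.0, compare=False)


class Label:
    """Hypothetical label holding several alternative tags, each with a score."""

    def __init__(self, tags: list[Tag]) -> None:
        if not tags:
            raise ValueError("a label needs at least one tag")
        self.tags = list(tags)

    def best(self) -> Tag:
        return max(self.tags, key=lambda t: t.score)

    def texts(self) -> set[str]:
        return {t.text for t in self.tags}

    def __eq__(self, other: object) -> bool:
        # Two labels are equal when they carry the same texts, whatever the scores.
        if not isinstance(other, Label):
            return NotImplemented
        return self.texts() == other.texts()

    def __hash__(self) -> int:
        return hash(frozenset(self.texts()))


he_1 = Label([Tag("Source", 0.7), Tag("Goal", 0.3)])
he_2 = Label([Tag("Goal", 0.5), Tag("Source", 0.5)])
print(he_1 == he_2, he_1.best().text)
```

Because `score` is declared with `compare=False`, the generated `__eq__` and `__hash__` of `Tag` depend on `text` alone, which matches the rule that scores do not affect equality.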

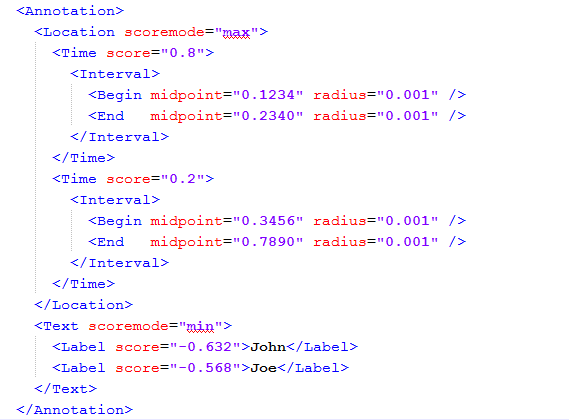

An ambiguous label, at an ambiguous location with imprecise time localizations:

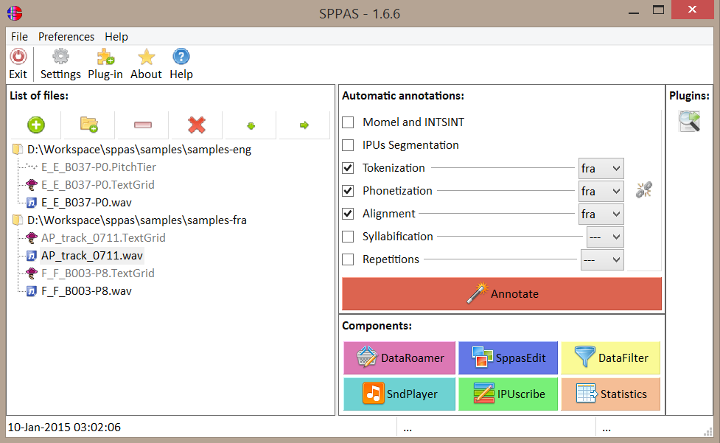
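Combining both ideas, a minimal sketch of such an annotation might hold alternative localizations and alternative labels, each with a score. This assumes that an "ambiguous location" means several candidate intervals; `Annotation`, its methods and all values are hypothetical illustrations, not the SPPAS data model:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimePoint:
    """Hypothetical imprecise time value ranging from x_minus to x_plus (seconds)."""
    x_minus: float
    x_plus: float


@dataclass(frozen=True)
class Interval:
    begin: TimePoint
    end: TimePoint


@dataclass
class Annotation:
    """Hypothetical annotation whose location and label are both ambiguous.

    `locations` and `labels` each hold (alternative, score) pairs.
    """
    locations: list[tuple[Interval, float]]
    labels: list[tuple[str, float]]

    def __post_init__(self) -> None:
        if not self.locations or not self.labels:
            raise ValueError("need at least one location and one label")

    def best_location(self) -> Interval:
        return max(self.locations, key=lambda pair: pair[1])[0]

    def best_label(self) -> str:
        return max(self.labels, key=lambda pair: pair[1])[0]

    def span(self) -> tuple[float, float]:
        """Widest time range covered by any alternative localization."""
        start = min(iv.begin.x_minus for iv, _ in self.locations)
        stop = max(iv.end.x_plus for iv, _ in self.locations)
        return start, stop


first = Interval(TimePoint(1.20, 1.24), TimePoint(1.50, 1.56))
second = Interval(TimePoint(1.40, 1.44), TimePoint(1.70, 1.74))
ann = Annotation(locations=[(first, 0.6), (second, 0.4)],
                 labels=[("Source", 0.7), ("Goal", 0.3)])
print(ann.best_label(), ann.span())
```

Keeping every alternative lets a later analysis either commit to the best-scoring reading or reason over the whole span.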

Several programs were created within the scope of this work, in particular to integrate annotation and analysis, and to support resource conversion and exploitation.

The proposed framework is implemented in Python.

The API is included in SPPAS.

The automatic annotations included in SPPAS currently use the MidPoint/Radius representation.

A prototype query system is available.

Our proposal relies only on the representation of an Annotation; consequently, it could be implemented as an extension of many existing annotation tools or schemes.